The Center for Exascale Simulation of Materials in Extreme Environments (CESMIX) seeks to advance the state of the art in predictive simulation by connecting quantum and molecular simulations of materials with state-of-the-art programming languages, compiler technologies, and software performance engineering tools, underpinned by rigorous approaches to statistical inference and uncertainty quantification. Our motivating problem is to predict the degradation of complex (disordered and multi-component) materials under extreme loading, which is inaccessible to direct experimental observation. This application represents a technology domain of intense current interest and exemplifies an important class of scientific problems: those involving material interfaces in extreme environments.

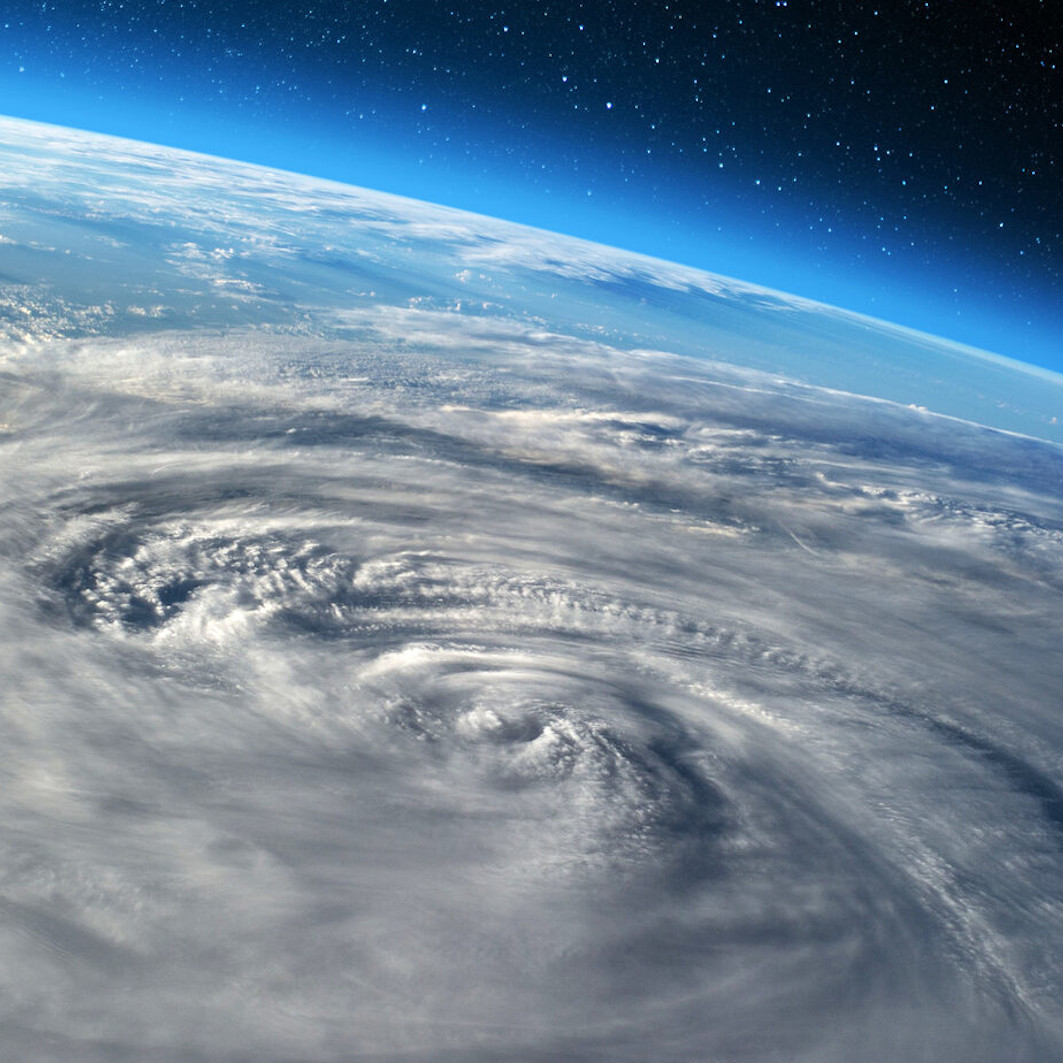

We know that climate change is poised to reshape our world, but we lack clear enough predictions about precisely how. At CliMA, our mission is to provide the accurate and actionable scientific information needed to face the coming changes: to mitigate what is avoidable, and to adapt to what is not. We want to provide the predictions necessary to plan resilient infrastructure, adapt supply chains, devise efficient climate change mitigation policies, and assess the risks of climate-related hazards to vulnerable communities.

Anthropogenic climate change will reshape our world, but we lack sufficiently accurate and useful projections of how. The goal of this grand challenge is to provide accurate and actionable scientific information to decision-makers to inform the most effective mitigation and adaptation strategies. We envision a novel platform that leapfrogs existing climate decision support tools by leveraging advances in computational and data sciences to improve the accuracy of climate models, quantify their uncertainty, and address the trade-off between performance and computation time, with attention to industry and government stakeholder needs. First, we will develop a digital twin of the Earth that harnesses more data than ever before to reduce and quantify uncertainties in climate projections. Then, to serve the needs of stakeholders, we will develop emulators of the digital twin tailored to maintain the highest possible accuracy in predicting specific variables, like droughts, floods, or heat waves, while still being easy and fast to run.

Scientific machine learning (SciML) and differentiable programming have shown a tremendous ability to accelerate scientific simulations and improve the accuracy of models. However, many of the demonstrations have not bubbled up to the large-scale scientific infrastructure used in practice. The purpose of this project is to demonstrate SciML and differentiable programming tooling on a full climate model. Using the MIT-CalTech CLIMA climate model as a base, we plan to demonstrate neural parameterizations of ocean models and probabilistic programming for uncertainty quantification on the full-scale Earth system model. This will be fostered by new differentiable programming tools, Diffractor.jl and Enzyme.jl, which specialize in high-performance construction of adjoint equations for general-purpose code.

With ever-increasing numbers of satellites in the night sky due to projects like Starlink, proper radio-frequency interference (RFI) cancellation is required for ground-based radio interferometry to continue taking accurate images of the night sky. This project is developing tools for SciML-based adaptive cancellation via continuous-time echo state networks, along with new tooling for convex optimization.

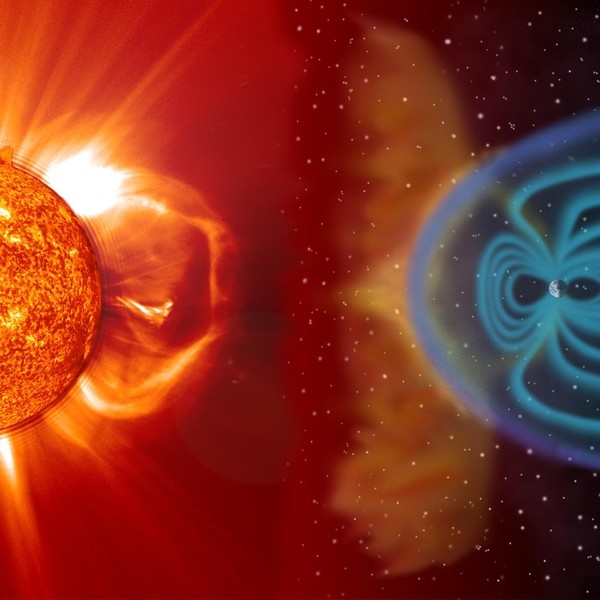

The forecasting and reanalysis of space weather events poses a major challenge for many activities in space and beyond. For example, solar storms can damage power supply infrastructure and computer networks on Earth, and they also critically affect space exploration and satellite traffic management. As a consequence, improved space weather prediction capabilities can unlock tremendous societal and economic opportunities, such as improved internet coverage via satellite mega-constellations. This multi-disciplinary project brings together teams from various institutions and fields to extend the computational capabilities of space weather modeling. As part of a larger NSF and NASA initiative motivated by the White House National Space Weather Strategy and Action Plan, the main objective of this project is to build a Julia-based, composable, sustainable, and portable open-source software framework for the model-based analysis of space weather. To that end, the project seeks to develop scalable algorithms for the simulation of large-scale, high-fidelity models, reduced-order modeling, data assimilation, and uncertainty propagation. These algorithms are then integrated and distributed to the wider space weather modeling community in the form of a composable software framework built in Julia.

Mathematical modeling of infectious disease transmission is an important tool in forecasting future trends of pandemics, such as COVID-19, and in evaluating "what if" projections of different interventions. Yet different models tend to give different results. To draw robust conclusions from modeling, it is important to consider multiple models. This can be facilitated by expanding the modeling community, since models tend to reflect the people who develop them, and by bringing models and model developers together to compare and contrast models. The aims of this project are: to expand and empower the next generation of models and modelers; to act as an incubator for a platform for comparisons of infectious disease models, both in terms of computers and people; and hence to enable swift and more robust policy decisions for current and future pandemics.

The chemical equations for predicting battery performance are difficult to solve, so scientists commonly use many approximations to obtain solvable models. However, these approximations can cause a loss of predictive accuracy in extreme scenarios, such as cold weather, which in turn causes simulation-designed battery materials to perform poorly in real-world scenarios. The Julia Lab is developing neural PDE approaches for mixing data with partial differential equations in physics-informed neural networks (PINNs) to improve predictive performance. The tools from this project are open sourced as the NeuralPDE.jl repository.

Airborne magnetic anomaly navigation (MagNav) is a GPS alternative that uses the Earth’s magnetic anomaly field, which is largely known and unchanging, to navigate. Some of the current problems with MagNav involve 1) reducing excess noise in the system, such as the magnetic field from the aircraft itself, 2) determining position in real time, and 3) combining with other systems to present a complete alternative to GPS. The present project investigates using scientific machine learning (SciML) to solve MagNav shortcomings and provide a viable alternative to GPS.

In conjunction with Julia Computing, Inc., the lab is developing a neural component machine learning tool to reduce the total energy consumption of heating, ventilation, and air conditioning (HVAC) systems in buildings. As of 2012, buildings consume 40 percent of the nation's primary energy, with HVAC systems comprising a significant portion of this consumption. It has been demonstrated that the use of modeling and simulation tools in the design of a building can yield significant energy savings, up to 27 percent of total energy consumption. However, these simulation tools are still too slow to be practically useful. Julia Computing, together with the Julia Lab, seeks to improve upon these tools using the latest computing and mathematical technologies in differentiable programming and composable software to enhance the ability of engineers to design more energy-efficient buildings.

This project involves software development efforts for the accelerated solution of the differential and algebraic equations describing the kinetics of electrochemical systems, integration of these solvers with machine learning approaches, and global optimization over the chemical design space. The high-value candidates will be tested experimentally, validating the entire approach. We are generating cell-scale battery models based on porous-electrode theory (such as the P2D/DFN model) from PyBaMM in ModelingToolkit.jl to leverage the simulation power of the SciML ecosystem, either using the Method of Lines (finite differences followed by ODE/DAE solvers) or by surrogatization with physics-informed neural networks. We will also develop hybrid models that directly incorporate experimental data into the models.
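The Method of Lines mentioned above can be illustrated with a minimal sketch. It is not the project's battery model: it assumes a 1D diffusion equation u_t = D u_xx on [0, 1] with zero boundary values as a stand-in, and the function names (`heat_rhs`, `rk4_step`, `solve_heat`) and all parameter values are hypothetical. Space is discretized with central finite differences, and the resulting ODE system is advanced in time with a classical fourth-order Runge-Kutta step.

```python
import math

def heat_rhs(u, dx, diffusivity):
    """Finite-difference right-hand side of u_t = D * u_xx (illustrative stand-in PDE)."""
    n = len(u)
    dudt = [0.0] * n
    for i in range(1, n - 1):
        dudt[i] = diffusivity * (u[i - 1] - 2.0 * u[i] + u[i + 1]) / dx**2
    # Boundary entries stay 0.0, which holds u fixed at both ends (Dirichlet).
    return dudt

def rk4_step(f, u, dt):
    """One classical Runge-Kutta step for the ODE system du/dt = f(u)."""
    k1 = f(u)
    k2 = f([ui + 0.5 * dt * ki for ui, ki in zip(u, k1)])
    k3 = f([ui + 0.5 * dt * ki for ui, ki in zip(u, k2)])
    k4 = f([ui + dt * ki for ui, ki in zip(u, k3)])
    return [
        ui + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for ui, a, b, c, d in zip(u, k1, k2, k3, k4)
    ]

def solve_heat(n_points=41, diffusivity=1.0, t_end=0.1, dt=1e-4):
    """Method of Lines: discretize space first, then integrate the ODEs in time."""
    dx = 1.0 / (n_points - 1)
    x = [i * dx for i in range(n_points)]
    u = [math.sin(math.pi * xi) for xi in x]  # assumed initial condition
    u[0] = u[-1] = 0.0                        # enforce boundary values exactly
    rhs = lambda state: heat_rhs(state, dx, diffusivity)
    for _ in range(round(t_end / dt)):
        u = rk4_step(rhs, u, dt)
    return x, u

x, u = solve_heat()
exact_mid = math.exp(-math.pi**2 * 0.1)  # analytic solution at x = 0.5, t = 0.1
print(f"numerical: {u[20]:.5f}  analytic: {exact_mid:.5f}")
```

The explicit time stepper is chosen here only to keep the sketch dependency-free; its step size is limited by the grid spacing, which is one reason stiff or DAE-capable solvers are typically preferred for real electrochemical models.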

Affordable and clean energy for everyone, and actions for an improved climate, are two of the United Nations Sustainable Development Goals. Any realistic energy solution must combine the entire portfolio of possible energy sources with a gradual transition from the current fossil-based economy to a fully sustainable energy economy. To improve the climate during this transition, energy efficiency in oil production must be maximized together with profit. The project aims to develop new methods, algorithms, and tools for oil production with maximized profit and minimum energy consumption under uncertain information. Uncertainties relate to (i) unknown future prices and costs, (ii) uncertainties in operational allocation, and (iii) uncertain knowledge of the reservoir and equipment.

With the growing penetration of wind, solar, and storage technologies, all interfaced via fast-acting power electronic converters (PECs), the complexity of the dynamic behavior of large-scale power systems is increasing by orders of magnitude. Recent research indicates that the simulations that are the foundation of power system planning and operations decisions must also evolve. This project aims to develop new tools at the intersection of scientific machine learning (SciML) and power systems engineering. The project is grounded in emerging tools to accelerate dynamical system simulations with surrogates and approximations.

Universal differential equations (UDEs) have been shown to be an effective method for the automated discovery of mechanistic models for understanding systems and predicting their behaviors. However, a crucial question that must be addressed in any scientific endeavor is uncertainty quantification. Here we are looking into aspects such as the data requirements for successful UDE discovery of equations, the robustness of neural-network-trained differential equations, the predictive power of UDEs and neural ODEs, and more. This is all done in tandem with Sandia National Labs on the topic of epidemic modeling.

Software stacks come with many tradeoffs: a productive environment using Python is slow, C is portable to everything but is a bug-rich environment, and assembly can give you maximum performance combined with a development experience expected of the 1930s. Julia is changing the game by providing a language that operates at the intersection of the "three P's": performance, productivity, and portability. The PAPPA project is focused on building out the ecosystem around Julia to ensure that users can easily write correct code once that runs everywhere with breakneck performance. Central to this goal is building out packages such as Dagger.jl and KernelAbstractions.jl, which provide modern interfaces for executing code on any device, at any scale.

Every disease that can be solved by identifying a single gene change has essentially been solved. Every mental health disorder that is still undertreated requires understanding the system as a whole and building solutions that treat the system as a complex interaction of many parts. The tool that will enable the discovery of such complicated targeting strategies is Neuroblox. Neuroblox allows for simulation and estimation of the system-level properties of higher-order brain properties to discover accurate biomarkers for mental health disorders, along with the design of experiments to allow for the targeted creation of treatments.

Pharmacometrics and its subfields (quantitative systems pharmacology (QSP), physiologically-based pharmacokinetics (PBPK), and pharmacokinetics/pharmacodynamics (PK/PD)) use biochemical differential equation models with an attached statistical model. These models live in a regime of differential equations that are highly stiff and often small (<100 ODEs), where asymptotic performance considerations do not fully describe the mathematical problem. This work involves the acceleration of differential equation solving on these systems, along with improvements to sensitivity analysis of small stiff biochemical networks, SciML automated discovery of nonlinear mixed effects (NLME) models, surrogatization of larger QSP models, and more.
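To make the notion of stiffness concrete, here is a minimal sketch that is not drawn from any pharmacometric model. It assumes the scalar linear test equation y' = -rate * y as a stand-in, and the function names and the values of `rate`, `h`, and `steps` are hypothetical. With a step size that is large relative to the fast time scale, explicit Euler diverges while implicit (backward) Euler decays as the true solution does.

```python
def explicit_euler(rate, y0, h, steps):
    """Forward Euler on y' = -rate * y: y_next = y + h * (-rate * y)."""
    y = y0
    for _ in range(steps):
        y = y + h * (-rate * y)
    return y

def implicit_euler(rate, y0, h, steps):
    """Backward Euler on y' = -rate * y: solve y_next = y - h * rate * y_next."""
    y = y0
    for _ in range(steps):
        y = y / (1.0 + h * rate)
    return y

# Assumed illustrative values: a fast decay rate and a step 10x larger than 1/rate.
rate, h, steps = 1000.0, 0.01, 20
y_explicit = explicit_euler(rate, 1.0, h, steps)
y_implicit = implicit_euler(rate, 1.0, h, steps)

print(f"explicit Euler: {y_explicit:.3e}")  # magnitude grows each step
print(f"implicit Euler: {y_implicit:.3e}")  # decays toward zero
```

Each explicit step multiplies the solution by (1 - h * rate) = -9, so the iterate grows in magnitude, whereas each implicit step multiplies it by 1 / (1 + h * rate) = 1/11. For small systems like those described above, the cost of the implicit solve per step is modest, which is why constant factors rather than asymptotic scaling tend to dominate solver performance.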
